Introduction

Stolen secrets and credentials are one of the most common ways for attackers to move laterally and maintain persistence in cloud environments.

Modern cloud deployments use secrets management systems such as KMS to protect key material at rest and to avoid leaking keys or credentials in source code or other build artifacts. However, once an application loads a secret, that secret is unprotected at runtime, so any vulnerability in or compromise of a service can lead to credential theft.

In this blog post, we introduce credential tokenization, an approach that protects secrets at runtime, enforces separation of duties, and reduces the burden of credential rotation.

The problem

Third-party non-human identities (NHIs) and machine credentials such as API keys, access tokens, and OAuth 2.0 client credentials pose three key challenges:

- Absence of lifecycle management: there is no standardized way to manage the provisioning and de-provisioning of these identities.

- Lack of security protections: it’s not possible to enforce security mitigations such as step-up authentication and MFA on NHIs, because they are non-interactive credentials.

- Complex remediation: revoking or rotating these credentials is often slow and cross-functional, because it can result in downtime.

Further, these credentials are fully exposed at runtime even when a secrets manager is in use, because the application accesses the key material or secret directly.

Our approach

Like the Fly.io security team, we have adopted a paradigm we call Credential Tokenization. The idea is similar to what payment processors do with credit card numbers: replace the card number with a token so that the real number is exposed as little as possible.

We implement this as a Gate plugin: Gate injects secrets into outgoing requests, so the application itself never touches key material.
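The payment-processor analogy fits in a few lines of Python. This is a minimal sketch of the idea only, not Gate's implementation: the TokenVault class and the tok_ prefix are hypothetical, and in a real deployment the vault role is played by Gate and the secret engine, never by the application.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault illustrating credential tokenization.

    The application only ever holds the opaque token; the vault (in practice,
    the proxy plus the secret engine) holds the real credential.
    """

    def __init__(self) -> None:
        self._secrets: dict[str, str] = {}

    def tokenize(self, secret: str) -> str:
        # The token is random, so it carries no information about the secret.
        token = "tok_" + secrets.token_urlsafe(16)
        self._secrets[token] = secret
        return token

    def detokenize(self, token: str) -> str:
        # Only the component that owns the vault can map a token back to its secret.
        return self._secrets[token]


vault = TokenVault()
token = vault.tokenize("sk_example_api_key")  # hypothetical API key
print(vault.detokenize(token))  # sk_example_api_key
```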

Benefits

Our approach provides several benefits:

- Reduced key material exposure: keeping secrets out of your application code and configuration files reduces the risk of accidental exposure or leaks. Further, because the application never holds the secret, a compromise of the application does not by itself leak the secret.

- Fine-grained access control: Gate’s Tokenizer plugin can be combined with our OPA or OAuth 2.0 plugins to enforce fine-grained access control policies on secret retrieval and injection. This is especially useful when the secret itself carries no RBAC or role restrictions.

- Separation of duties and easier rotation: developers don’t have to worry about credential lifecycle management. Because applications only reference a token, credentials can be rotated without downtime.
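The access control and rotation benefits can be illustrated with a small toy. It is a sketch under stated assumptions, not Gate's API: Gate delegates policy decisions to its OPA or OAuth 2.0 plugins, whereas here hard-coded caller and host allowlists stand in for such a policy. TokenStore, TokenizedSecret, and every name in the usage lines are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TokenizedSecret:
    """Hypothetical record for one tokenized credential and its usage policy."""
    value: str
    allowed_callers: set[str] = field(default_factory=set)  # e.g., service names
    allowed_hosts: set[str] = field(default_factory=set)    # permitted destinations


class TokenStore:
    """Illustrative store; the allowlist checks stand in for an OPA policy."""

    def __init__(self) -> None:
        self._records: dict[str, TokenizedSecret] = {}

    def register(self, token: str, record: TokenizedSecret) -> None:
        self._records[token] = record

    def resolve(self, token: str, caller: str, host: str) -> str:
        """Return the secret only if this caller may send it to this host."""
        record = self._records.get(token)
        if record is None:
            raise PermissionError("unknown token")
        if caller not in record.allowed_callers:
            raise PermissionError(f"caller {caller!r} may not use this token")
        if host not in record.allowed_hosts:
            raise PermissionError(f"token may not be sent to {host!r}")
        return record.value

    def rotate(self, token: str, new_value: str) -> None:
        # Applications keep presenting the same token; only the value behind it changes.
        self._records[token].value = new_value


store = TokenStore()
store.register("tok_payments", TokenizedSecret(
    value="key-v1",
    allowed_callers={"billing-service"},
    allowed_hosts={"api.payments.example"},
))
print(store.resolve("tok_payments", "billing-service", "api.payments.example"))  # key-v1
store.rotate("tok_payments", "key-v2")
print(store.resolve("tok_payments", "billing-service", "api.payments.example"))  # key-v2
```

Because the application only ever presents tok_payments, replacing key-v1 with key-v2 requires no change on the application side.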

Example

Topology

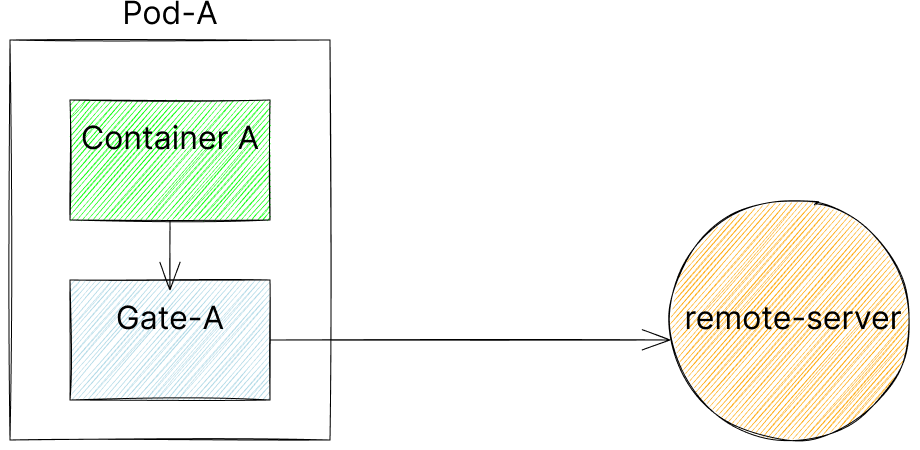

Gate can be deployed in many different topologies: as a sidecar, as an external authorizer, a lambda authorizer, and more. For simplicity in this example we’ll assume a sidecar deployment, that looks like the picture below:
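To make the sidecar topology concrete, here is a self-contained toy that runs on localhost: an "application" sends its request, carrying only a token, to a local proxy, which swaps the token for the real credential before forwarding to an "upstream" API. It is a minimal sketch, not Gate: the handler classes, the tok_123 token, the Bearer Authorization format, and the plain-HTTP hops are all assumptions made for illustration.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit

PROXY_HEADER = "SID_Proxy_Auth"
# Hypothetical token -> secret mapping; only the sidecar can see it.
SIDECAR_SECRETS = {"tok_123": "real-api-key"}


class Upstream(BaseHTTPRequestHandler):
    """Stand-in for the third-party API: echoes back the headers it received."""

    def do_GET(self):
        body = json.dumps(dict(self.headers.items())).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):  # silence per-request logging
        pass


class Sidecar(BaseHTTPRequestHandler):
    """Toy sidecar: swaps the proxy token for the real credential, then forwards."""

    def do_GET(self):
        secret = SIDECAR_SECRETS.get(self.headers.get(PROXY_HEADER, ""))
        if secret is None:
            self.send_error(403, "missing or unknown proxy token")
            return
        # Transparent forwarding: the request line carries the absolute upstream URL.
        url = urlsplit(self.path)
        skip = {PROXY_HEADER.lower(), "host", "connection"}
        headers = {k: v for k, v in self.headers.items() if k.lower() not in skip}
        headers["Authorization"] = f"Bearer {secret}"
        conn = http.client.HTTPConnection(url.hostname, url.port or 80)
        path = url.path + (f"?{url.query}" if url.query else "")
        conn.request("GET", path, headers=headers)
        upstream = conn.getresponse()
        body = upstream.read()
        conn.close()
        # Relay the upstream response back to the application.
        self.send_response(upstream.status)
        self.send_header("Content-Type", upstream.getheader("Content-Type", "application/octet-stream"))
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass


def start(handler):
    """Serve a handler on an ephemeral localhost port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def app_request(sidecar_port, upstream_url, token):
    """The application: sends only the token, never the real secret."""
    conn = http.client.HTTPConnection("127.0.0.1", sidecar_port)
    conn.request("GET", upstream_url, headers={PROXY_HEADER: token})
    resp = conn.getresponse()
    body = resp.read()
    conn.close()
    return resp.status, body


if __name__ == "__main__":
    upstream, sidecar = start(Upstream), start(Sidecar)
    target = f"http://127.0.0.1:{upstream.server_address[1]}/v1/charges"
    status, body = app_request(sidecar.server_address[1], target, "tok_123")
    print(status, json.loads(body)["Authorization"])  # 200 Bearer real-api-key
```

The application never sees real-api-key; only the toy sidecar does. TLS, connection reuse, and error handling in a real Gate deployment are not modeled here.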

Workflow

Here’s a high-level overview of how credential tokenization works with SlashID and Gate:

- Secret creation: Use the secret generator utility to create a secret with the desired configuration. The utility lets you specify the secret engine (e.g., SlashID, AWS Secrets Manager, GCP Secret Manager), the injection method (e.g., header injection, HMAC injection), and other relevant settings. The secret is then stored in the chosen secret engine.

- Application request: When your application needs to make a request to an external service that requires a secret (e.g., an API key), it sends the request to the Gate proxy instead of directly to the service. The request includes a special header (e.g., SID_Proxy_Auth) that contains the secret identifier.

- Secret retrieval: Gate’s Tokenizer plugin intercepts the request and extracts the secret identifier from the SID_Proxy_Auth header. It then queries the secret engine for the corresponding secret using that identifier.

- Secret injection: Once the Tokenizer plugin receives the decrypted secret from the secret engine, it injects the secret into the request according to the secret’s configuration. This can involve setting a header value, computing an HMAC signature, or injecting an OAuth token.

- Request forwarding: After injecting the secret, Gate forwards the modified request to the destination service. The service receives the request with the required secret, processes it, and sends the response back to Gate.

- Response handling: Gate receives the response from the service and forwards it back to your application. The application receives the response without any knowledge of the tokenization process that occurred behind the scenes.
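The retrieval and injection steps above can be expressed as a pure function over request headers. This is a hedged sketch, not the Tokenizer plugin's code: SecretConfig, the "header" and "hmac" method names, the HMAC-SHA256 hex digest, and the choice to strip the SID_Proxy_Auth header before forwarding are all assumptions, and a plain dict stands in for the secret engine.

```python
import hashlib
import hmac
from dataclasses import dataclass

PROXY_HEADER = "SID_Proxy_Auth"


@dataclass(frozen=True)
class SecretConfig:
    """Hypothetical stored secret: its value plus how to inject it."""
    value: str
    method: str                           # "header" or "hmac" (illustrative names)
    header_name: str = "Authorization"
    header_format: str = "Bearer {secret}"  # only used by the "header" method


def tokenize_request(headers: dict[str, str], body: bytes,
                     store: dict[str, SecretConfig]) -> dict[str, str]:
    """Return the headers to forward upstream, with the secret injected."""
    # Retrieval: find the secret identifier (header names are case-insensitive).
    token = next((v for k, v in headers.items()
                  if k.lower() == PROXY_HEADER.lower()), None)
    if token is None or token not in store:
        raise PermissionError("missing or unknown proxy token")
    cfg = store[token]  # a dict lookup stands in for the secret engine call
    # Do not forward the proxy token itself to the third party.
    out = {k: v for k, v in headers.items() if k.lower() != PROXY_HEADER.lower()}
    # Injection: apply the method configured for this secret.
    if cfg.method == "header":
        out[cfg.header_name] = cfg.header_format.format(secret=cfg.value)
    elif cfg.method == "hmac":
        # Sign the request body with the secret as the HMAC key.
        out[cfg.header_name] = hmac.new(cfg.value.encode(), body, hashlib.sha256).hexdigest()
    else:
        raise ValueError(f"unsupported injection method: {cfg.method}")
    return out


store = {
    "tok_api": SecretConfig("sk_example", "header"),
    "tok_webhook": SecretConfig("whsec_example", "hmac", header_name="X-Signature"),
}
print(tokenize_request({"SID_Proxy_Auth": "tok_api"}, b"", store))
# {'Authorization': 'Bearer sk_example'}
```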

Request Handling in a picture

Gate configuration

The Gate configuration is also straightforward. Here is a simple example:

```yaml
gate:
  plugins:
    - id: tokenize_requests
      type: tokenizer
      enabled: true
      parameters:
        header_with_proxy_token: SID_Proxy_Auth
        secret_engine: slashid
  default:
    transparent: true
```

In this configuration:

- header_with_proxy_token specifies the header that contains the encrypted secret identifier.

- secret_engine is set to “slashid”, indicating that SlashID is used as the secret engine. We also currently support HashiCorp Vault, AWS Secrets Manager, GCP Secret Manager, and Azure Key Vault.

With this configuration, Gate’s Tokenizer plugin will intercept requests, retrieve the corresponding secret from SlashID based on the secret ID in the SID_Proxy_Auth header, and inject it into the request based on the secret’s configuration.

Setting transparent: true puts Gate in transparent mode: no URL rewriting occurs, and the request is forwarded to the remote server as-is.
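The difference between transparent forwarding and URL rewriting can be shown with a small helper. Only the transparent branch reflects the behavior described above; the upstream_url function, its target parameter, and the rewriting branch are hypothetical illustrations rather than Gate's logic.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def upstream_url(request_url: str, transparent: bool, target: Optional[str] = None) -> str:
    """Choose where a proxy forwards a request (illustrative, not Gate's code)."""
    if transparent:
        # Transparent mode: no rewriting; forward to exactly the URL the app requested.
        return request_url
    if target is None:
        raise ValueError("a rewrite target is required when transparent is false")
    # Hypothetical rewriting mode: keep the path and query, swap scheme and host.
    req, tgt = urlsplit(request_url), urlsplit(target)
    return urlunsplit((tgt.scheme, tgt.netloc, req.path, req.query, req.fragment))


print(upstream_url("https://api.example.com/v1/charges?limit=10", transparent=True))
# https://api.example.com/v1/charges?limit=10
print(upstream_url("http://localhost:8080/v1/charges?limit=10", False, "https://api.example.com"))
# https://api.example.com/v1/charges?limit=10
```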

Conclusion

Through credential tokenization, you can minimize the handling of key material, enforce fine-grained access control on API keys, and make credential lifecycle management significantly easier.

Please contact us if you are interested in securing your third-party credentials.